NeurIPS 24 Takeaways

SOTA #6: Ilya dropping a bomb, is Europe doomed, and new $1M rewards for better benchmarks

Last week I attended NeurIPS in Vancouver. Besides the super fun catch-ups with all the European (and French) researchers I could have met back home (but it’s, of course, much better with a 9-hour time difference), the week was packed with data on where the AI SOTA is heading.

So here it is, a little synthesis just for you, my dear readers. As you might know from my previous posts, I cared specifically about three topics while attending the conference, and this might have skewed my experience a bit:

Transformers x tabular data - with Gaël Varoquaux and Frank Hutter pushing the boundaries of what's possible [incoming post]

Advanced program generation for math / software, for example combining MCTS and RL as making programs is just not the same as making code [> article here]

Alternatives to transformers for AI reasoning capabilities - System 2 architectures, neurosymbolic stuff, etc. [> article here]

You will find a scientific article selection at the end of the post; let’s get started with the takeaways:

NeurIPS TL;DR

Let’s say… tensions in AI development are bubbling furiously to the surface.

1/ The AI Reasoning Schism

The main takeaway, to be fair, was that we are witnessing what I would have to describe as a religious divide between two factions, because it’s really about faith at this point.

The "West Coast optimists" (predominantly US-based people/teams) believe that AGI is just around the corner, or at least that it requires “one more push” on existing methods. They're all in on LLMs demonstrating true reasoning, holding up grokking and test-time compute as proof that generalization is the precursor to actual reasoning. “You just need more data, is all.”

On the other end, the "European heritage skeptics," led by François Chollet and Ilya Sutskever, argue that we're mostly seeing sophisticated pattern matching rather than true reasoning. They're pushing for System 2 reasoning or fundamentally new approaches (for what it’s worth, I lean strongly this way).

Ilya made the headlines with his talk at the end of the conference, basically arguing that scaling laws are over.

However, the funding landscape is *dramatically skewed* towards our maximalist friends: more than $200B backs the optimist camp [estimate of the Big Tech spend + core AI VC funding], versus less than $2B for alternative approaches. I find this asymmetry fascinating - the alternative camp right now is just not funded well enough to prove anything and must be 100x more capital-efficient to have the right to succeed.

Well, for now :)

It’s a religious schism within VCs as well. Some firms, like Benchmark, take positions that are well known to lean AI-skeptic and have decided to wait out the first wave. Other firms, like Sequoia, have adopted a “back them all” approach, and you will find them on the cap table of every visible Core AI company.

You even see this divide inside partnerships. At [one of the largest VC firms], they are lucky enough to have two quite technical VC partners on the team. One is an LLM maximalist, and the other is a true believer in System 2. I'm sure this makes for interesting investment committees.

2/ The Compute Destitutes

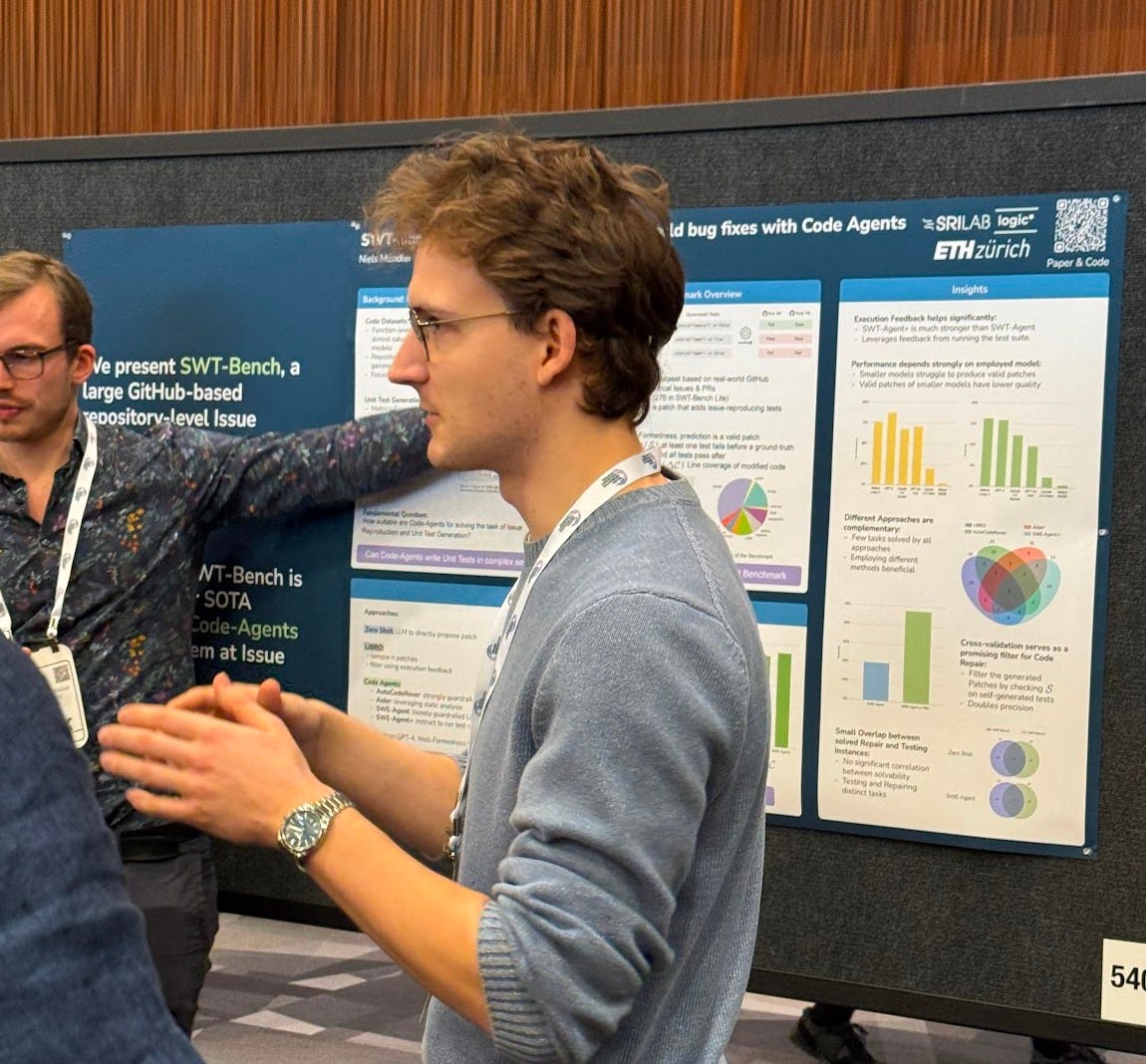

OK, OK, duh, but this slapped me in the face repeatedly during the week: groundbreaking AI research increasingly comes from a handful of compute-rich organizations. DeepMind (overwhelmingly) + other Google orgs, Meta (notably people from FAIR), Microsoft, Apple, and well-funded academic powerhouses like Carnegie Mellon (194 papers presented, insane), Stanford, and MIT are setting the pace. From Europe, ETH (and their Bulgarian friends at INSAIT) and the Max Planck Institute both secured super strong industrial partnerships and compute: they were the only two European academic research centers that gathered crowds around multiple papers.

Quieter? The traditionally strong European academic institutions - where were Oxford, Cambridge, TUM, and the French research centers? There, sure, but not front and center.

The uncomfortable truth is that meaningful insights into large models require deep pockets and hands-on experience with large-scale AI in production. Which you can only get when… you have large models in production and can play with them, learn how they behave, and figure out how to scale them. In most academic places, the budget to do this is out of reach.

Some of them found alternative branches to explore, but as the main attraction of the year is scaled transformers, they basically have to retreat to “cheaper” research areas and can’t compete on the more visible topics.

It’s too early for this to show, but I'm excited about the potential hybrid research coming in the next few years from PhD students who intern at Mistral and Black Forest Labs, as those are the large-scale model builders we have here.

Here is the complete data if you care (just rank by deduplicated affiliation); however, it does not give you the ability to cross-analyze with “people gathered around the poster and were wowed.” For this, you had to attend.
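If you want to try the "rank by deduplicated affiliation" step yourself, here is a minimal sketch of how I would go about it. It assumes the data has been loaded as a list of papers, each with a list of affiliation strings; the field names, the alias table, and the toy entries below are all made up for illustration and are not the real dataset.

```python
from collections import Counter


def rank_affiliations(papers, aliases=None):
    """Count papers per affiliation, counting each affiliation once per paper.

    `papers` is assumed to be a list of dicts with an "affiliations" list.
    `aliases` maps lowercase spelling variants to one canonical name
    (a hypothetical, hand-written table).
    """
    aliases = aliases or {}
    counts = Counter()
    for paper in papers:
        seen = set()
        for raw in paper["affiliations"]:
            key = raw.strip().lower()
            # Collapse spelling variants so one lab is not counted twice.
            seen.add(aliases.get(key, key))
        counts.update(seen)
    # Highest paper count first.
    return counts.most_common()


# Toy data, purely illustrative.
papers = [
    {"title": "paper-1", "affiliations": ["Google DeepMind", "DeepMind", "ETH Zurich"]},
    {"title": "paper-2", "affiliations": ["ETH Zürich", "MIT"]},
    {"title": "paper-3", "affiliations": ["deepmind "]},
]
aliases = {
    "google deepmind": "deepmind",
    "eth zurich": "eth",
    "eth zürich": "eth",
}

ranking = rank_affiliations(papers, aliases)
for name, n_papers in ranking:
    print(f"{name}: {n_papers}")
```

The alias table is where most of the work hides: the same lab tends to show up under several spellings, so the ranking is only as good as the deduplication.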

3/ The Benchmarks are Poisoned! (now mainstream knowledge)

The conference highlighted a critical issue: how poorly AI systems have been evaluated. There is now a consensus that traditional academic benchmarks have all been compromised (read: used to train large models), leading to some exciting developments:

François Chollet dropping ARC-AGI V2 (can’t wait) and the V1 leaderboard technical report

Andy Konwinski, a co-founder of Databricks and Perplexity and now a VC backing technical founders from the Bay, is launching a new SWE-bench with a $1M prize pool and clever anti-poisoning measures modeled on ARC-AGI

This could finally force LLM makers to confront their models' real limitations rather than chasing potentially compromised metrics. Maybe we'll start seeing industry expectations align with practical experience - no more "90% on benchmark X but struggles with basic tasks."

For context, OpenAI o1 hit only 20% on ARC V1. V2 should reveal more about true reasoning capabilities, and I'll be grabbing popcorn when the results drop for these new “very good at reasoning” transformer models.

***

Well, these were my takeaways from NeurIPS. If you attended, what were yours? I'm keen to hear in the comments or via DMs!

Also, LLMs have implicit world models. Isn’t that super cool?

Love,

Marie

PS: I’m organizing the third edition of my own conference (virtual + free) on Jan. 17th to discuss the early GTM of AI products with the first commercial hires from Mistral, ElevenLabs, and PolyAI. You can register or find out more here: binarystars.org

PPS: Here is a cute selection of the papers I liked (I will update the page as I review my notes!)

PPPS: obligatory Optimus pic from the Tesla stand. That thing is creepy.

PPPPS: Wayve’s booth, super crowded!