Hello friends, I’m Marie Brayer, GP at Fly Ventures, inception stage VC for technical founders solving hard problems. I spend a lot of time on the state of the art of the things that I like, and this turns into pages of convoluted exploration, with scientific articles, insider quotes, and memes. Enjoy!

SOTA #1 - The AI Developer

Today we'll discuss GitHub Copilot, StarCoder, Devin, Magic, Ring attention, MoE and Meta CoT, and why I’m betting on a hybrid approach.

I initially wrote this for myself so fair warning that this is not digestible and probably way too long. I’ve however chunked it into little self-contained pieces:

1/ What is software and what do SWEs do? A detailed explanation of what software is and what software engineers do.

2/ The “Copilot” category: a discussion about the "Copilot" category which is about writing code and auto-completion of code.

3/ What about the Code Generation models? Is there a VC thesis in there? An investigation of the potential for venture capital in the area of Code Generation models.

4/ If writing little bits of code with AI is no longer cool, what about higher-level value propositions? An exploration of higher-level value propositions in the field of AI coding and the technical limitations that stand in the way.

5/ The issue with the 10M context window: a discussion on the challenges that come with larger context windows in AI coding.

6/ Tackling The Context vs Speed Problem: shedding light on the product design issues that come with bigger context windows and inference time limitations.

7/ Copilot Workspace vs Devin: an analysis of Copilot Workspace and Devin, two platforms for AI-assisted coding.

8/ OK, but what about generating software from a prompt? A discussion about the potential and limitations of AI in generating software from a prompt.

9/ Low-level blockers to the “Midjourney of Software”: Identification of the main obstacles in the path towards the model-centric approach.

10/ Midjourney of Software, option 1: the mega verticalized model: Exploration of a potential solution for AI-generated software drawing from Google Brain's breakthroughs.

11/ Midjourney of Software, option 2: the Hybrid approach: Examination of the Neurosymbolic approach as another potential solution for AI-generated software.

Closing remark: the contenders: Final thoughts on the current leading players in the field of AI-generated software.

1/ What is software and what do software engineers do?

If you have been an SWE before or part of a technical team you can just skip to the next part. For everybody else, let’s recap:

There are many different types of software, and everything is always an exception.

Let’s focus here on software that you and I use every day like your banking app or Reddit, not software that pilots autonomous cars or software for low-level GPU optimization. The common analogy that software development is like building a house is a very poor metaphor - if it were as hard and as costly to extend a piece of software as it is to add a new room to a house, then [unless you’re writing critical rocket software] it’s probably a really bad sign!

What is software anyway?

1/ Code and asset files: a shared repository, typically hosted on GitHub (i.e. a shared folder on the internet), with a bunch of files written in a few programming languages. In these files, there will be object descriptions, functions, unit tests, parameter files, scripts, system instructions, documentation, flat data files, sometimes binary libraries, media files… The size of a project repository varies a lot: git cloning TensorFlow leaves you with a massive 10 GB folder, while React, on the other hand, is 5 kB.

2/ If it’s stateful (=has a database attached), one or more databases with the data captured by the program

3/ An ecosystem of third-party tools, services, and dependencies (monitoring, CMS, storage, security, external APIs, secret management…)

4/ An executable form / a setup to run it…

the code could be packaged in docker containers, running on a virtual machine from AWS, and orchestrated by Kubernetes

if it is Java code, maybe it can be compiled into a .jar, and run on any computer

if it’s a video game written in C++, it could be compiled into a .exe to run on a local Windows machine

it could be hosted on a PaaS like Heroku or Vercel, to take the complexity of “running” the code away from the developers

a small machine learning project with a Gradio interface could be run on Huggingface

a Swift iOS app could be built and run on a local iPhone

a program in assembler or a similarly low-level language could be compiled and run on “the edge”, like on an industrial robot or an electric toothbrush (i.e. running on a specialized device “smaller” than a normal computer)

All the OGs here will know that there is an entire subreddit dedicated to running the video game Doom on anything, including smart microwaves and pregnancy tests.

…

Now, what exactly does a software engineer do?

“Writing code” is a small part of the work it takes to deliver proper code. To do so, developers are:

of course writing code (this happens in a dedicated piece of software called an IDE, or in a text editor)

checking the rest of the code to make sure you’re not destroying someone else's work and making sure the code merges correctly ⇒ collaboration

making sure people can understand and reuse your code ⇒ documentation

making sure you use the same best practices as everybody else ⇒ code review

making sure it does what it’s supposed to do and works in all situations ⇒ testing & QA

making sure it runs securely, and scales properly without using too many resources or causing problems ⇒“DevOps”

prioritizing and turning larger specifications into modular sub-tasks ⇒ management

changing a major part of the stack / a major version of a library / updating to new standards ⇒ migration & refactoring

being aware of the open source landscape around your problem space to see if there are pieces you could adapt… or new technologies and solutions you could adopt to make your product better, learning new tools ⇒ training

observing/meeting customers and users to understand the problem space of the product better and make better decisions ⇒ product

Junior people typically start by… writing code, because it’s the part where you don’t need a lot of experience. After many code reviews with more senior people, they begin to understand the larger product and codebase, and get fewer flags on their code reviews. With years of experience, they make more and more design decisions. Ultimately they have opinions about design patterns (architecture), tool choices, or full stacks for specific needs… and maybe even opinions about which Linux distribution is better (at this point, they have very long beards).

Now that we have established that writing code is maybe 20-30% of the skillset required to be a software engineer, let’s dive into the AI-powered developer productivity landscape.

2/ The “Copilot” category

A lot of work and money has been put into writing code and auto-completion of code. We will call this the “Copilot” category for now. This is today the most mature segment, with tools that have been largely adopted, mostly GitHub Copilot. Although this segment has benefited from the advent of LLMs, there were precursors like JetBrains (the IntelliJ product line notably) who offered an AI-enhanced experience in the IDE before it was cool. A good list and review of the specific players: https://blog.pragmaticengineer.com/ai-coding-tools-explosion/

There are countless testimonies from developers and CTOs that Copilot increases productivity, with a consensus in the interviews of at least “+20%”.

“Copilot saves me time, it’s good at picking up on repetitive tasks. I do not use it to write code but for all the non-core tasks.” (CTO, team of 10)

“As CTO, I'm still hands-on in the code. Everyone on the team has a copilot license. Started with 5 then rolled it out to everyone. Copilot makes the first write of all my methods and all my tests. It's SO NICE.” (CTO, team of 50)

This 20% “empirical” productivity gain is matched in some of the literature. GitHub released a (of course, positively biased) study in which it measures a 20 to 50% productivity gain. The biggest testimony of all is likely the commercial success: today (April 2024), more than 1.3 million developers pay for it, across 50,000 companies.

Some places however have banned the use of Copilot (and copy-pasting from ChatGPT) out of many different fears (IP issues, data leakage, possible undisclosed aggressive telemetry…) ⇒ it’s difficult for me at this point to be opinionated on this.

More interesting, some reputed places have forbidden Copilot use because they assumed it would lower their productivity. The logic, which might seem non-trivial at first, is that although it increases code writing productivity …let’s say by 50%…, overall it would decrease the general productivity of the team and generate engineering problems, as code generation is a small part of overall work.

“We are not supposed to commit code that is generated by LLMs. It could be possible to convince our CTO to adopt this if the models were trained on our own code.” - (senior developer, team of ~100)

Supporting this idea, a (negatively biased) analysis done by GitClear showed that “code churn” was increasing, in line with the widespread adoption of AI tools. The causal link is not very well established in their study, but it’s interesting nonetheless:

“We examine 4 years’ worth of data, encompassing more than 150m changed lines of code, to determine how AI Assistants influence the quality of code being written. We find a significant uptick in churn code and a concerning decrease in code reuse.”

Another concern discussed in the recent literature is security: “Pearce et al. (2022) investigated code generated by Copilot for known security vulnerabilities. The authors prompted Copilot to generate code for 89 cybersecurity scenarios. They found that approximately 40% of generated code snippets were vulnerable to some form of known attack.”

After chatting with Sam Wholley, who was an engineer himself but is now the talent partner for Lightspeed and used to hire engineer talent for the likes of Uber, a more profound analysis emerged:

“Copilot is the equivalent of suddenly having your normal motorways (of code) turn into 12-lane motorways. If you don’t rethink highway ramps and tolls you are in for big trouble”.

By increasing individual output, the AI Copilots highlight the weaknesses of an engineering team. Is the team too junior? Bam- you’ll get flooded with a lot more code that doesn’t pass code review. Is your team organization too top-down (reliant on one mega senior person)? Bam- the leader now has to review 50% more code and can’t handle the load, which creates a frustrating bottleneck.

Sam had also seen the opposite: “I have this friend who leads a huge team of extremely senior talented people at [large Enterprise company]. A lot of people ex-Google, Uber, ... She equipped everybody with Copilot AND at the same time rethought all the processes. Notably, she doubled the number of people who were doing code reviews. She told me that with this system, she achieved a massive +70% productivity”.

In conclusion: contrary to the general belief that AI developer copilots generate more productivity with junior engineers, they achieve more impact at team level within the very good teams that can harness faster code production. On less organized teams, more code production leads to short-term gains but generates system-level entropy that highlights the flaws of the organization.

As for the VC business case, I’ll borrow the words of The Pragmatic Engineer:

“The “AI coding buddy” space feels already saturated. For organizations hosting source code on GitHub, Copilot is a no-brainer. For companies using Sourcegraph for code search, Cody is the clear choice. For those using Replit for development, the Replit AI tool is the one to go with. The only major source control platforms that don't have AI assistants yet are GitLab and Bitbucket, and this is surely just a matter of time.”

3/ What about the Code Generation models? Is there a VC thesis in there?

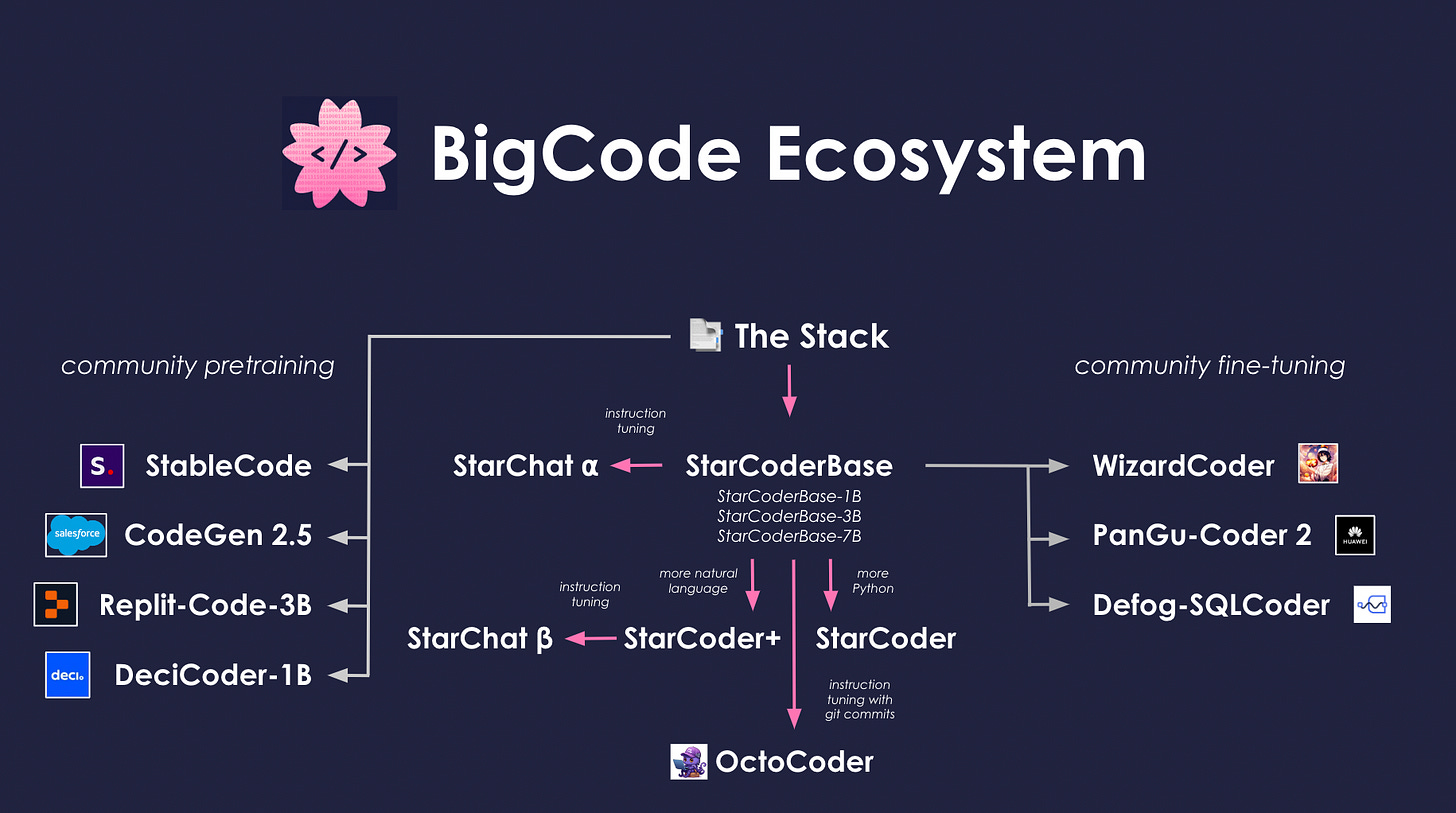

On the model side, you have a plethora of available OSS models with performance close to GPT-4, ranging from WizardCoder (Microsoft) to Code Llama (Meta) to StarCoder (BigCode). Mistral also recently released their code generation model, Codestral.

To give you an idea of how extreme the effort put into these models is, let’s focus for a minute on the BigCode OSS project (backed by ServiceNow, Nvidia, etc).

We were lucky to get a glimpse of the learnings and challenges of this entire training process from Harm de Vries, who was one of the leads of the project: “We crawled the entirety of GitHub and the history of GitHub, plus all the data from a European nonprofit that saves all public code through a partnership. We then de-duplicated them and used this huge 2.9 TB of code to train multiple sizes of code generation models.”

Harm’s team also came up with the exact optimal size for best results at inference.

Is there a wedge here for a new startup in large code-generating models? I don’t think so. The next big idea is to use synthetic data to gain a few more percentage points of performance, but everyone pushing it is a GPU-rich company.

4/ If writing little bits of code with AI is no longer cool, what about higher-level value propositions?

We saw that AI is now awesome at writing little bits of code to support programmers in writing functions, setting up templates, etc. (“the Copilot”). Could this be extended to more complex tasks that require coding skills, but also an understanding of the entire project? Use cases such as:

automated test generation

to do this, you have to understand the intention behind a function or a page of code so that you can test it. You have to grasp what the intended user experience is, for instance, to judge if the code is incorrect

automated peer reviews, code quality improvement

to do this, you have to understand the preferred style and coding conventions of the team, and how they adapt to the project. Ideally you have to have seen other projects beyond this one to understand the style

automated bug fixing and PR generation

to fix a bug, you have to understand why it’s a bug in the first place, and what does not fit with the rest of the codebase. To write a fix (a PR, “pull request”), you have to understand where the fix fits in the entire codebase, and it has to be good code fitting with the style and logic of the rest of the project.

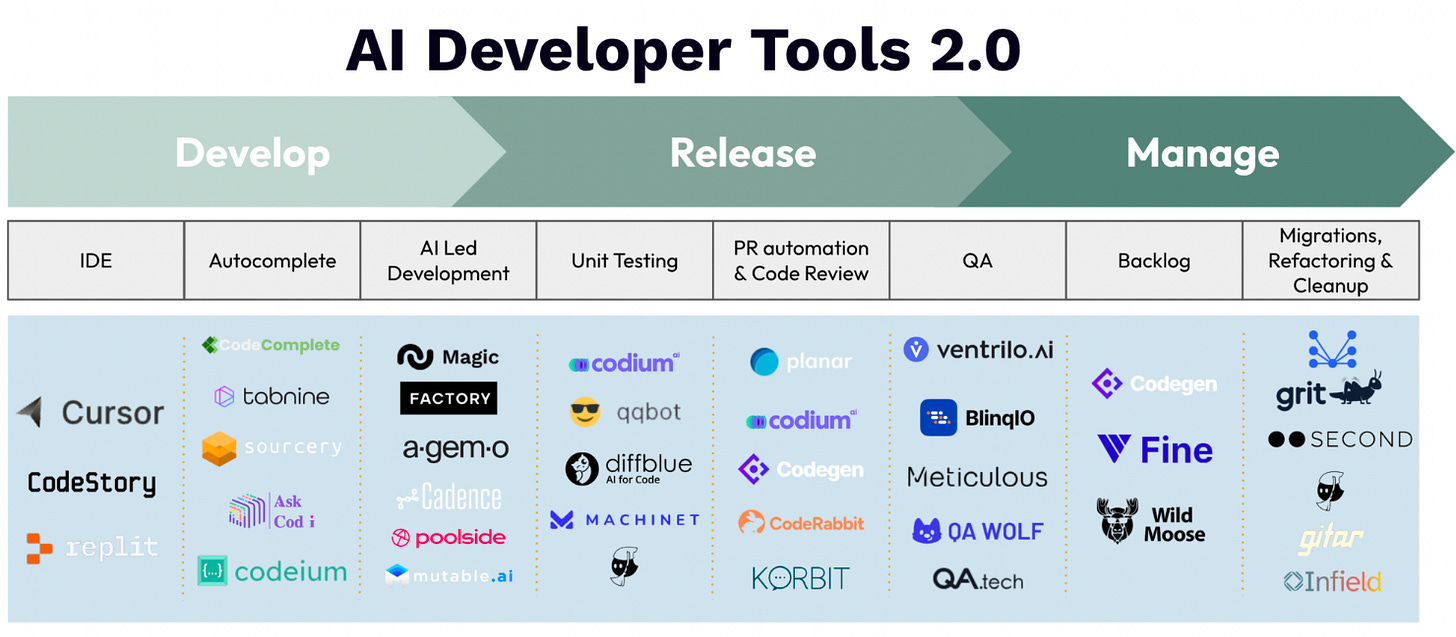

Quoting the Scale landscape again, all these people here are working on these problems (and many more, we meet like one team per week these days):

Let’s call this the Automated Code Improvement category, as an umbrella term.

First, something important that you need to know to understand this problem space: there are of course generally agreed-upon best practices and design patterns, but there is no objective, indisputably better way to write code.

“Better code” does not exist without context (in a given team, for a specific project…).

Startups operating in this space therefore cannot operate in a vacuum, they need to harness the existing code base of their customers. However, they are faced with a powerful limitation of the current LLMs: the context window size.

GPT-4 (Turbo) has a 128K context window for instance, Claude 3 is 200K, and the latest Gemini model just recently released to the public boasts 2M tokens. I read somewhere that GPT 4.5 or 5’s context windows would be 8M tokens (don’t quote me on this!).

But is 1M or 8M big enough? How many tokens is a codebase worth?

The size of a codebase in terms of tokens can vary greatly depending on the complexity and purpose of the project. To give you a sense of scale without focusing specifically on tokens but rather on lines of code, which can be a proxy for understanding size:

The average iPhone app might have less than 50,000 lines of code.

A modern car uses around 10 million lines of code.

The Android operating system runs on about 12-15 million lines of code.

Large projects, such as Google's entire codebase for its services, can have over 2 billion lines of code.

For our 50,000-line iPhone app, if the average line has around 50 characters (including spaces), that totals approximately 2,500,000 characters. Using the approximation that 1 token ≈ 4 characters, we divide 2,500,000 characters by 4 to get an estimated token count. This rough calculation suggests that our basic iPhone app would “weigh” 625,000 tokens: a basic iPhone app exceeds the 128K context window of the best current LLM for code (GPT-4) almost five times over.
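The back-of-the-envelope calculation above can be sketched in a few lines of Python. The 50 characters per line and 4 characters per token are the rules of thumb from the text, not tokenizer measurements, and the function and dictionary names are just illustrative:

```python
# Rough token estimate for a codebase, using the rules of thumb from the text.
CHARS_PER_TOKEN = 4  # approximation, real tokenizers vary by language and style


def estimate_tokens(lines_of_code: int, avg_chars_per_line: int = 50) -> int:
    """Estimate the token count of a codebase from its line count."""
    return lines_of_code * avg_chars_per_line // CHARS_PER_TOKEN


# Line counts quoted in the text.
CODEBASES = {
    "average iPhone app": 50_000,
    "modern car": 10_000_000,
    "Google (all services)": 2_000_000_000,
}

# Context windows quoted in the text.
CONTEXT_WINDOWS = {"GPT-4": 128_000, "Gemini": 2_000_000}

if __name__ == "__main__":
    for name, lines in CODEBASES.items():
        tokens = estimate_tokens(lines)
        fitting = [m for m, window in CONTEXT_WINDOWS.items() if tokens <= window]
        print(f"{name}: ~{tokens:,} tokens, fits in: {fitting or 'none'}")
```

With these assumptions, the iPhone app only fits in the 2M-token window, and a modern car (around 125M tokens) fits in none of them.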

Yes context windows will be bigger in the future, this is a part of the solution. [the next paragraph contains A LOT of technical stuff, skip it if you don’t care].

However, larger context windows will come with new challenges, such as:

the quality of the answers (cf the super “Lost in the middle” research that shows that like humans, LLMs pay more attention to the beginning and the end of the content)

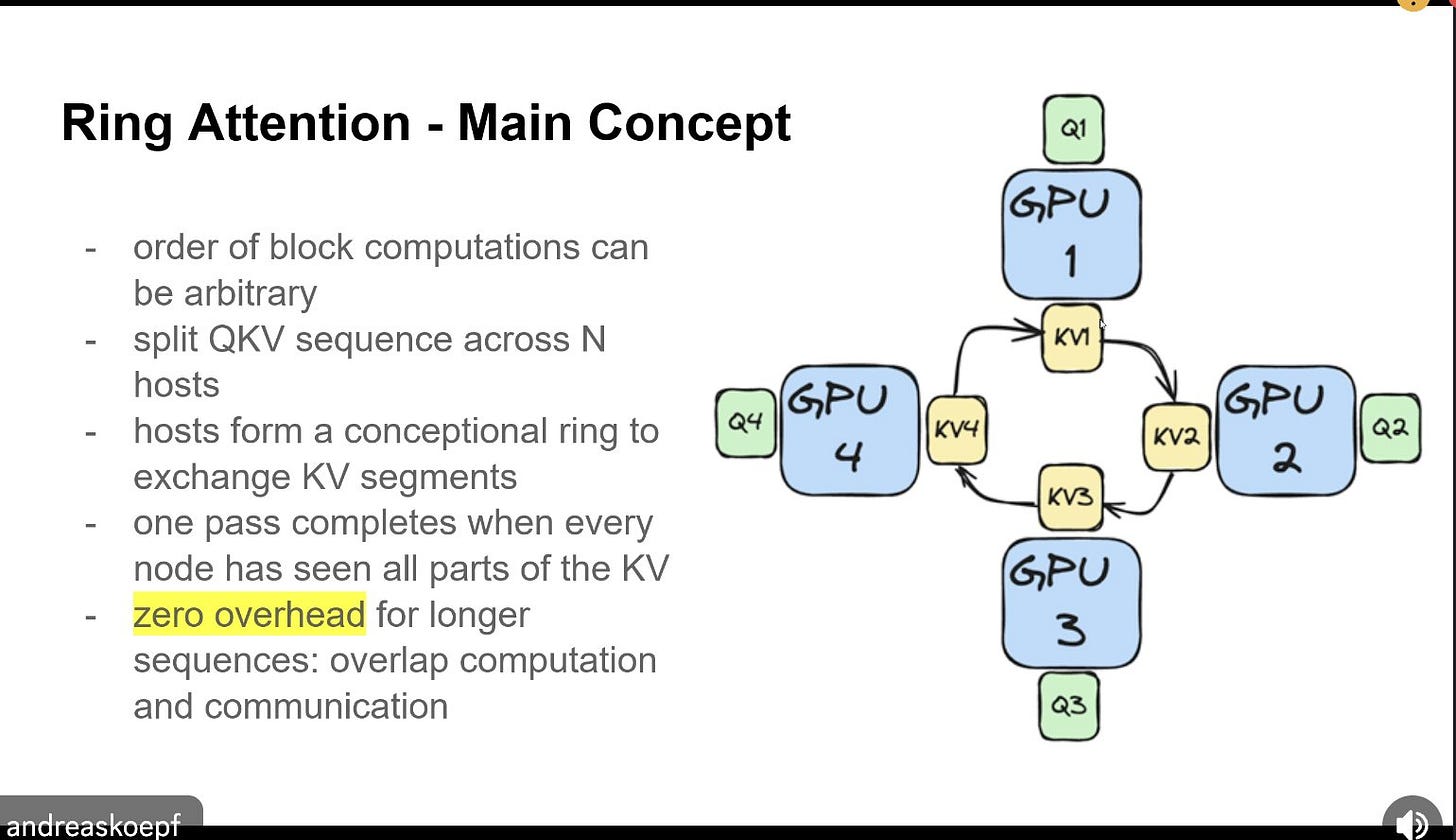

the cost/time to compute, as there is a quadratic relation between the context window/input sequence length and the cost of inference: i.e. when you input a 10x bigger request, the cost to infer it is 100x. A lot of people are working on making this relationship no longer quadratic (see meta-study here).
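To make the quadratic relation concrete, here is a minimal sketch that only counts the pairwise attention scores (every token compared with every other token). It deliberately ignores everything else that goes into real inference cost (feed-forward layers, caching, batching), so treat it as an illustration of the scaling, not a cost model:

```python
def attention_score_count(sequence_length: int) -> int:
    """Full self-attention compares every token with every token: n * n scores."""
    return sequence_length * sequence_length


def cost_ratio(base_tokens: int, scale: int) -> float:
    """How much more attention work a request `scale` times longer needs."""
    bigger = attention_score_count(base_tokens * scale)
    return bigger / attention_score_count(base_tokens)


if __name__ == "__main__":
    # A 10x longer input needs 100x the attention scores.
    print(cost_ratio(base_tokens=1_000, scale=10))
```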

5/ The issue with the 10M context window

Recently, GPU-rich people at Google decided to buy and tie together 512 Nvidia H100 GPUs, put them in a circle, and slap some Berkeley math on it to create an “infinite” context window that can manage 10M+ token-long requests.

Quick maths with the help of ChatGPT helped me calculate that if you own this monster setup:

with an optimized configuration that turns the quadratic relation between inference time and input into a linear one (possible but very top-tier work), it would take a whole 50 seconds to digest a 10M token request.

each inference request would also cost $17 to compute if you are a hardware supremacist like Google and own the hardware

So, if every single very large request “costs” 50 seconds and $17, you had better make sure it creates a lot of value. For comparison, the average hourly rate for a senior software engineer with around 10 years of experience in Paris is (approximately) €34 per hour: one such request costs about as much as half an hour of their time.

That’s not how people are going to do better code completion. So how are they doing it?
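Here is the same comparison as a small sketch. All the inputs are the figures quoted above; treating the dollar and euro amounts as the same currency is my simplifying assumption, and the function names are illustrative:

```python
# Figures quoted in the text for one very large request.
REQUEST_TOKENS = 10_000_000
REQUEST_SECONDS = 50
REQUEST_COST = 17.0          # currency treated loosely (assumption: $ ~ EUR)
ENGINEER_HOURLY_RATE = 34.0  # senior engineer in Paris, per the text


def tokens_per_second(tokens: int, seconds: float) -> float:
    """Throughput implied by digesting `tokens` in `seconds`."""
    return tokens / seconds


def cost_per_million_tokens(cost: float, tokens: int) -> float:
    """Cost of one million input tokens at this request price."""
    return cost / (tokens / 1_000_000)


def engineer_minutes(cost: float, hourly_rate: float) -> float:
    """How many minutes of engineer time the same money would buy."""
    return cost / hourly_rate * 60


if __name__ == "__main__":
    print(tokens_per_second(REQUEST_TOKENS, REQUEST_SECONDS))
    print(cost_per_million_tokens(REQUEST_COST, REQUEST_TOKENS))
    print(engineer_minutes(REQUEST_COST, ENGINEER_HOURLY_RATE))
```

Under these assumptions, each request has to beat what an engineer would produce in roughly thirty minutes just to break even.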

6/ Tackling The Context vs Speed Problem

To be fair, the size of most code projects exceeds the working context windows of humans too, that’s why there are different functions in the human software engineering teams to be sure that critical work is split and manageable.

“I think where humans have an advantage is in their ability to think in a modular way. I don’t need to understand the entire Unity codebase to be able to make a game, because I can get an understanding of the APIs provided and completely ignore the intricacies of how the physics engine underneath works.” - Kamen Brestnichki, co-founder of Martian Lawyers Games and former AI Researcher in code generation.

This also relates to why context switching is so detrimental for software engineers: you need to reload the relevant code and its structure into memory.

“The concept of providing the whole codebase comes from an analogy of giving an entire book to the LLM and asking it questions, but code is a lot more structured and modular than free text and thus you could likely get away with providing only relevant docs or even just a function signature for the bit of code that needs to be implemented.” - Kamen continues.

Embracing that ability to be modular is one of the ideas already used in production by the GitHub Copilot team, as revealed by Parth Thakkar in his reverse engineering of Copilot (if you care about this topic, this is a must-read).

Part of the secret sauce is the addition of a series of classifiers before the RAG.

“All of the code you’ve written so far, or the code that comes before the cursor in an IDE, is fed to a series of algorithms that decide what parts of the code will be processed by GitHub Copilot.” - Damian Brady, Staff Developer Advocate at GH (here)

Why would GitHub not take advantage of the larger context window models available? Because for Copilot use cases, inference speed is crucial. People are not going to wait a full minute to get basic scaffolding, code completion or unit tests written. They have less than 1 second to fit both inference time and the transport/processing of the request.

Copilot’s need to prioritize fast inference is documented in their press releases over and over, even though between May 2023 and April 2024, LLMs have changed a lot (if this is too much detail for you, skip to the next main part)

MAY 2023: 6000 characters limit

“Right now, transformers that are fast enough to power GitHub Copilot can process about 6,000 characters at a time. While that’s been enough to advance and accelerate tasks like code completion and code change summarization, the limited amount of characters means that not all of a developer’s code can be used as context”
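To give a feel for what “deciding what parts of the code will be processed” can look like under a hard character budget, here is a minimal sketch. It is not GitHub’s implementation: the token-overlap (Jaccard) scoring, the greedy packing, and all function names are assumptions chosen for illustration. Only the 6,000 character figure comes from the quote above.

```python
import re

CHAR_BUDGET = 6_000  # figure quoted in the May 2023 statement


def tokenize(code: str) -> set[str]:
    """Crude tokenizer: the set of identifiers and keywords in a snippet."""
    return set(re.findall(r"[A-Za-z_]\w*", code))


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two token sets, between 0.0 and 1.0."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def build_prompt(prefix: str, snippets: list[str], budget: int = CHAR_BUDGET) -> str:
    """Pack the code before the cursor plus the most similar snippets into `budget` chars."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    # The code before the cursor always goes in; keep its most recent part.
    prefix = prefix[-budget:]
    remaining = budget - len(prefix)
    target = tokenize(prefix)
    # Hypothetical relevance score: token overlap with the code being written.
    ranked = sorted(snippets, key=lambda s: jaccard(tokenize(s), target), reverse=True)
    chosen = []
    for snippet in ranked:
        cost = len(snippet) + 1  # +1 for the newline that joins it to the prompt
        if cost <= remaining:
            chosen.append(snippet)
            remaining -= cost
    return "\n".join(chosen + [prefix])
```

The design choice worth noting is that relevance filtering happens before the model is called, so the expensive step only ever sees a small, fixed-size prompt, which is what keeps latency low.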

FEBRUARY 2024: adding more context through modularity and code indexing

“GitHub Copilot’s contextual understanding has continuously evolved over time. The first version was only able to consider the file you were working on in your IDE to be contextually relevant. We then expanded the context to neighboring tabs, which are all the open files in your IDE that GitHub Copilot can comb through to find additional context.

Just a year and a half later, we launched GitHub Copilot Enterprise, which uses an organization’s indexed repositories to provide developers with coding assistance that’s customized to their codebases. With GitHub Copilot Enterprise, organizations can tailor GitHub Copilot suggestions in the following ways:

Index their source code repositories in vector databases, which improves semantic search and gives their developers a customized coding experience.

Create knowledge bases, which are Markdown files from a collection of repositories that provide GitHub Copilot with additional context through unstructured data, or data that doesn’t live in a database or spreadsheet.”

The speed lost by the reliance on more context was regained by fine-tuning the “GPT model” in their architecture (initially it was a plain GPT4).

The modularity and gained speed helped them “unlock” higher value propositions like code vulnerability detection.

“CodeQL [GitHub’s semantic analysis engine to find vulnerabilities in code, developed by the acquired startup Semmle] is at the center of this new tool, though (…) it uses “a combination of heuristics and GitHub Copilot APIs” to suggest its fixes. To generate the fixes and their explanations, GitHub uses OpenAI’s GPT-4 model.”

7/ Copilot Workspace vs Devin

In April 2024, GitHub announced Copilot Workspace, which goes even deeper, offering automated pull request generation from natural language queries (wow!) or from automatically detected issues in the code (wow!!!).

Of course, Workspace is barely out now. Here is the announcement video so that you can get a good idea of the product.

In March 2024, a Founders Fund-backed startup, Cognition Labs, also made a lot of noise by releasing the demos of Devin, their “AI SWE Agent”, which seems to have the same ambition.

Similar to the Copilot Workspace demo, Devin’s interface is a mix between Replit and ChatGPT: you interact with Devin by prompting it, and you can see panels on the side with the terminal and code being written, or even a browser tab when it’s relevant.

Devin uses third-party tools like a Terminal and a web browser, which could unlock higher-tier use cases.

“From the videos, it seems it’s more of an advanced agent that can switch between certain tools.” - Gergely Orosz, ex-Uber Engineering manager, in The Pragmatic Engineer

We have limited data, but for now the claims of an automated software engineer made by the Devin team seem very underwhelming (they have also been described as “outright plain lies” by some analysts).

from Devin Debunked Reddit thread here

My conclusion, after a deep look into these videos, is that they are working on a similar product vision to Copilot Workspace - which is valuable. Unfortunately, they must have felt the need to amplify all their claims for visibility to support their raise. This was likely a double-edged sword, as they later raised from… their existing lead investor (the renowned Founders Fund), and no new investor chose to lead the round.

“The interesting thing about Devin is that in its current form, it feels more like a coding assistant that can get some things done. It makes mistakes, needs input, and gets stuck on 6/7 bugs on GitHub. […] It’s more of a copilot. But we already have a co-piloting AI tool; it’s called GitHub Copilot! Microsoft launched it 2.5 years ago, and it became the leading AI coding assistant almost overnight. Today, more than 1.3 million developers pay for it (!!) across 50,000 companies.” - Gergely Orosz, ex-Uber Engineering manager, in The Pragmatic Engineer (here)

A few issues strike me in the Devin demos:

1/ From the timestamps in the video, Devin ran for something like 6 hours to obtain the final result. The cost of the “Upwork” demo is at least €100 if they use GPT-4.

To get to this €100 figure, I took the very reasonable hypothesis that Devin sent one request to GPT-4 every 20 seconds, each of 1,500 tokens (3 pages of text, basically the tabs it had open on the right). This is likely a big underestimate of the true cost (see the next part as to why), as Devin is built on agentic logic. If, as they say, they have an agentic workflow under the hood, it likely uses techniques like Mixture of Agents, or any other method where n agents are tasked in parallel with the same prompt and the best result is picked. The end cost is then at least n times higher, so the real figure is likely 10x to 100x more.
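The estimate can be reproduced with a short sketch. The 6 hours, 20 seconds and 1,500 tokens come from the text. The price of 0.06 per 1,000 tokens is my assumption (in the range of GPT-4 list prices at the time), currencies are treated loosely, and `parallel_agents` is a hypothetical knob standing in for the “n agents” multiplier:

```python
def devin_demo_cost(
    hours: int = 6,
    seconds_between_requests: int = 20,
    tokens_per_request: int = 1_500,
    price_per_1k_tokens: float = 0.06,  # assumed flat price, not an official quote
    parallel_agents: int = 1,           # hypothetical "n agents" multiplier
) -> float:
    """Lower-bound cost of the demo under the stated assumptions."""
    requests = hours * 3600 // seconds_between_requests
    total_tokens = requests * tokens_per_request
    return total_tokens / 1000 * price_per_1k_tokens * parallel_agents


if __name__ == "__main__":
    # 1,080 requests and 1.62M tokens: just under 100 with a single agent.
    print(round(devin_demo_cost(), 2))
    # With 10 agents working the same prompt in parallel, about 10x more.
    print(round(devin_demo_cost(parallel_agents=10), 2))
```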

2/ Did I mention offering a solution to the issue took 6 full hours? (we can debate somewhere else if it’s a correct solution)

I hear you, in the back, saying “But Marie don’t be so mean, it’s just a question of engineering and optimization, OpenAI prices will decrease, plus they’ll figure out some optimization tactics, that are likely in their R&D roadmap right now”.

Yeah, of course. Unfortunately, just like research on context window augmentation, these optimization problems are bleeding-edge research, and not solved. Devin’s approach today is about as elegant as brute force.

***

That’s it for today folks, would love your feedback at mary@fly.vc.

You can now jump to Part II here

8/ Can we generate software from a prompt?: A discussion about the potential and limitations of AI in generating not anymore “code”, but software from a prompt.

9/ Low-level blockers to the “Midjourney of Software”: Identification of the main obstacles in the path towards the model-centric approach.

10/ Midjourney of Software, option 1: the mega verticalized model: Exploration of a potential solution for AI-generated software drawing from Google Brain's breakthroughs.

11/ Midjourney of Software, option 2: the Hybrid approach: Examination of the hybrid approaches as another potential solution for AI-generated software.

Closing remark: the contenders: Final thoughts on the current leading players in the field of AI-generated software.

=> Take me to Part 2

Love you, thanks for reading!

Marie