LLM in the Enterprise [H1 2024], Part 2: the Buy case

LLMs in the Enterprise - yay, or nay? We looked at the Build case in Part 1:

Use cases: 1. automating tasks, 2. better search, and 3. accelerating content creation.

Enterprises say they prefer to build LLM solutions in-house to control data and costs. Very little reaches production, though, because of high inference costs, talent shortages, and misunderstandings of LLM limits and capabilities (especially RAG).

Did we look at all of this from the wrong angle?

After all, Enterprise companies did not develop their own cloud infrastructure (except for Amazon, of course, and… Lidl?). Why should they build their own AI systems?

PART 2: the Buy case

IV. LLM-based RPA

V. The one we did not see coming, ServiceNow

VI. What about Salesforce and Microsoft?

Conclusion: we’re still in the Stone Age, and it’s ok! + CARTE

IV. LLM-based RPA

An extremely clear application of our bucket 1, Computers don't speak French, is the use of LLMs, notably their vision capabilities, to empower forms of RPA (robotic process automation). Another common name for this (which I won’t use, as it makes me cringe too much) is “AI Agents”.

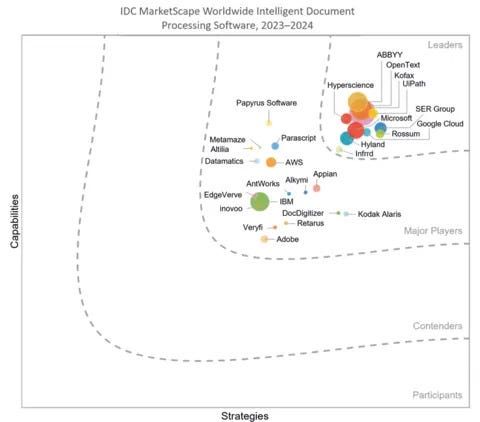

In this category, you also have a million forms of “digitization enablers” to help companies digitize their processes. One adjacent category is document processing (for invoices, etc.), with players such as London-based Rossum (raised 100M$ from GC, likely around 50M$ ARR), NY-based Hyperscience, or incumbents like ABBYY or OpenText.

Until recently, the state of the art in coupling NLP with vision limited these technologies' ability to deliver value beyond OCR. Now, thanks to LLMs, they can offer higher-level value propositions.

ABBYY, in particular, announced 60% YoY growth in 2024 (not a usual growth rate for them), and specifically 100% growth in new ARR. Ulf Persson, CEO at ABBYY, explains: "We are augmenting our products and solutions with this transformational technology, […] the benefits from LLMs and generative AI enables us to be a trusted resource for innovation teams needing counsel on how to use both heuristic and transformer-based AI to put their information to work and generate the results they expect."

In layman’s terms, the very important, ops-heavy “invoice-to-SAP” problem that resisted years of ML engineers is coming to a resolution.

Rossum, one of the new contenders, was founded by three Czech PhDs and already used deep learning for its pipelines, but in its own words, LLMs are a new unlock: “In 2017, we revolutionized the IDP market by introducing the first template-free platform. Today, we're primed to replicate this success with the launch of our specialized Transactional Large Language Model," commented Tomas Gogar, CEO at Rossum. Their new “LLM” engine won them a good spot on their Forrester Quadrant.

So, LLMs helped Document processors build more robust, higher-value use cases and might be killing the game. What about RPA?

UiPath, which created the RPA category and still leads it, offers (among other consolidated things) a platform to automate processes that require “clicks” or interfacing with old, closed software with no APIs. With an RPA process, you can turn a manual, click-heavy process (like reporting, analytics, receivables, etc.) into an automatic one by automatically clicking on the screen, filling forms, using OCR, etc.

System integrators usually design these processes for end customers using the orchestrator. I can imagine these pipelines were not very robust: whenever the UI changed too much, you had to redo the entire setup of the process. [Side note: UiPath orchestrator tutorials are an excellent way to fight insomnia]

Enter LLM vision: the AI can now tell you where to click. Suddenly, everyone imagines how much cooler and more robust RPA could be!

However, unlike its friends ABBYY and Rossum, UiPath did not manage to catch the trend and only released marketing materials and a high-level integration with GPT-4 in the orchestrator. The market was unimpressed.

[I’m guessing; don’t quote me] I can imagine Accel, which was one of the early backers and still owns a significant chunk of UiPath (over 10%), was also unhappy with the market cap.

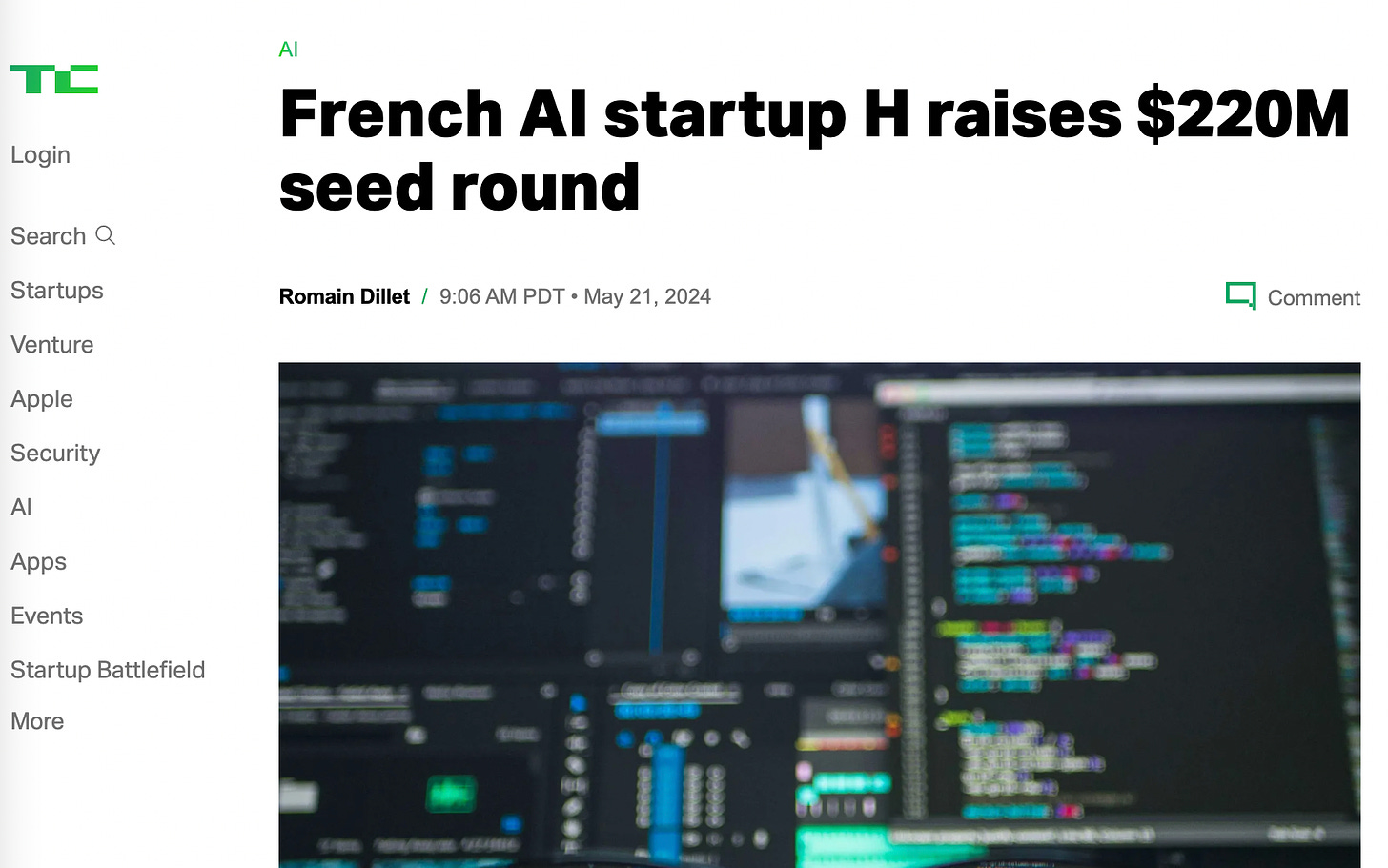

A few months later, this hit the front page of all European tech media.

H. (prev. Holistic) emerged at the right moment with Google Research/DeepMind founders and a $200 million inception round led by Accel, …UiPath, Celonis (another OG RPA company), and other VC funds not deterred by uncapped convertibles.

Et voilà: H’s goal is to develop a large action model to make LLM-based RPA a reality and deliver it to UiPath’s customers through a tight-knit partnership. The upcoming months will tell if UiPath + H delivers on the LLM-based RPA dream of allowing all companies to build their own “Klarna back office.”

Like most venture capital headliners, it’s an excellent show to watch with popcorn. Since the mega round, three of the four scientific co-founders have left the company.

The opportunity is real though: more “service-heavy” tech companies like Palantir or Artefact jumped at the opportunity to build automated customer processes and found an immense market pull. Several very strong founding teams are also working on their own approaches to disrupting RPA and process mining (for example, just saying, in London, Deventer, Tallinn, and Paris) - sometimes building in public, such as the open-source project LaVague.

V. ServiceNow

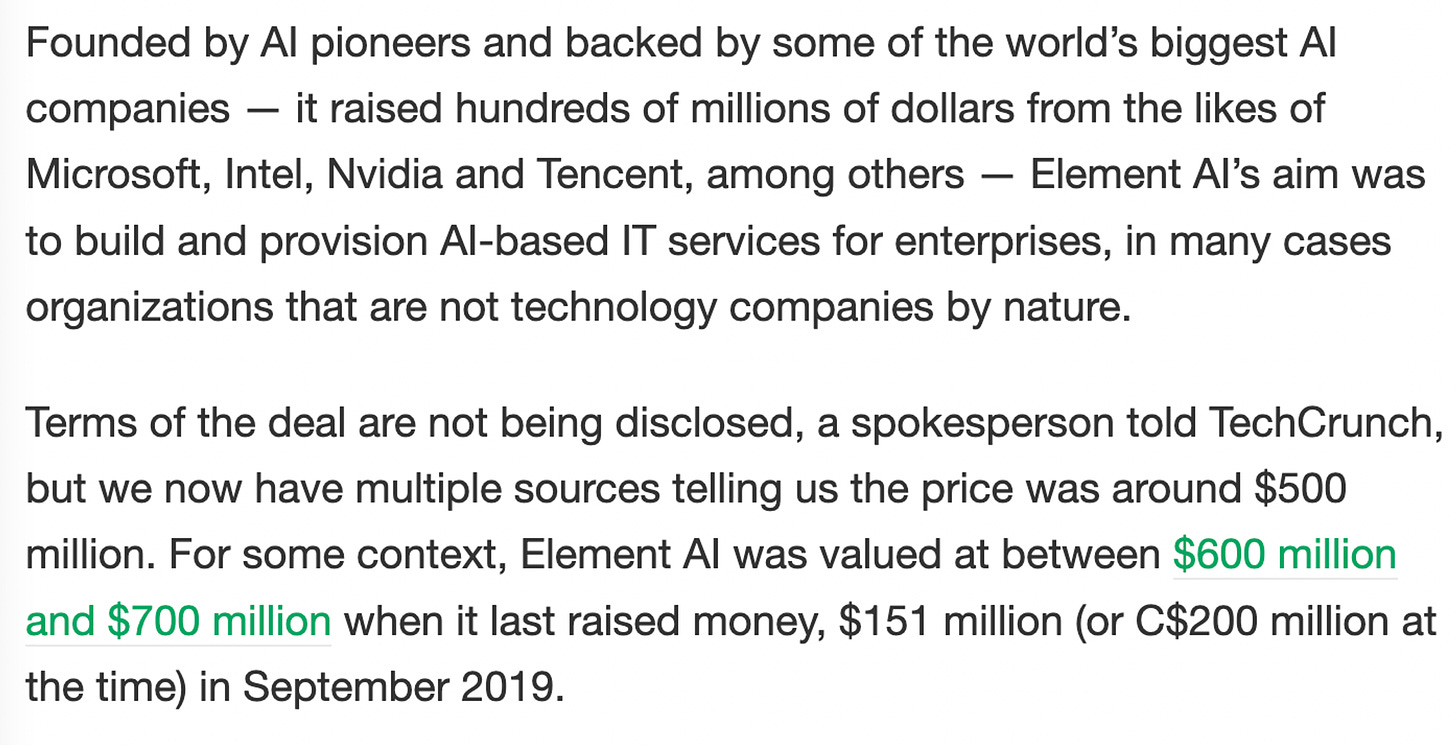

If you’re a real OG AI person, the investment of UiPath in H. must feel very… familiar. In 2020, ServiceNow acquired Element AI, the company founded by Yoshua Bengio himself.

ServiceNow’s market cap at the time was around 60B$. It was a bold move to invest 500M$ in an acqui-hire as their first major M&A transaction.

If you don’t know ServiceNow, it’s sort of the up-and-coming little brother of Salesforce in terms of a large enterprise SaaS platform, but instead of starting in Sales with a CRM, it started with the IT help desk. Its platform has three main use cases:

IT Service Management Tool: For instance, if you work in a Fortune 500 company and your computer malfunctions, you will create an IT ticket in ServiceNow.

Customer Success Helpdesk: When a customer encounters a problem, the customer success agent will create a ticket in ServiceNow (think Zendesk)

Workflow Tool: linking applications together. For example, when a salesperson joins a company, someone will create their HR profile in Workday. ServiceNow will then use this information to create their Salesforce account.

Since 2020, ServiceNow has grown 2.5x, from 4 to 10B$ ARR, and still grew 25% YoY in 2024. The tier-one AI team, created from the Element AI acquisition, delivered on many fronts:

an impressive collaboration with Hugging Face called BigCode, which led to the training of one of the best code generation model families (StarCoder)

a bunch of AI-powered internal tools and improvements, customer-facing or not

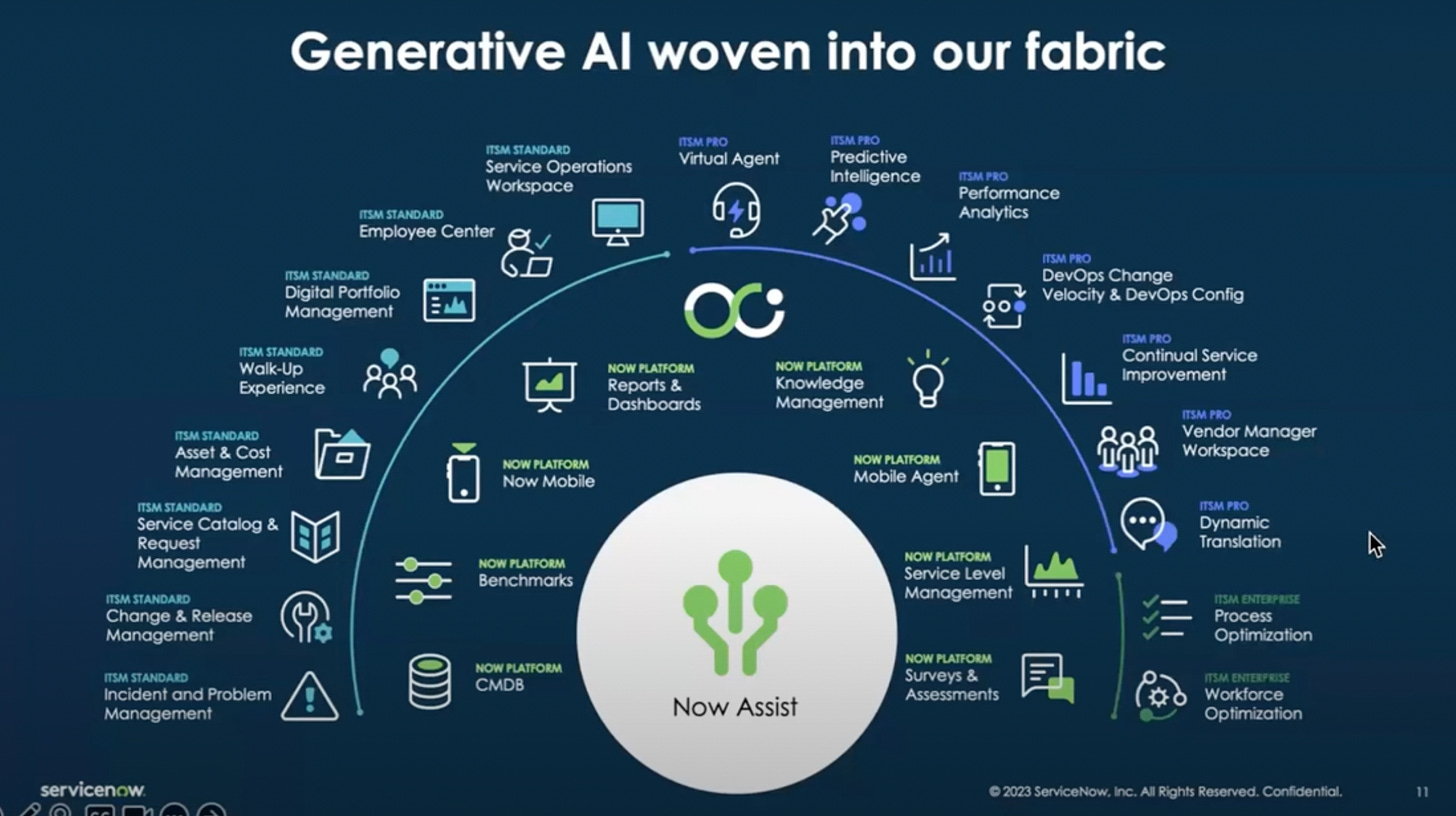

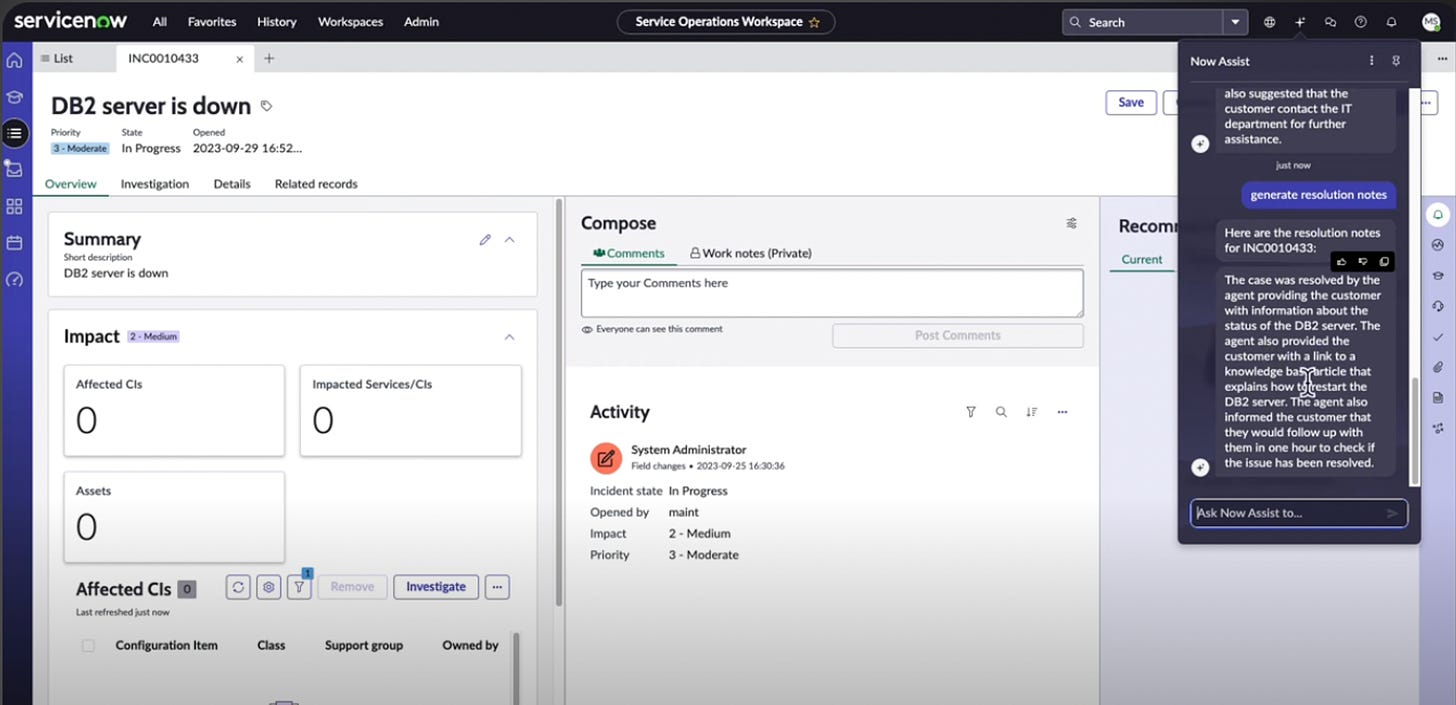

A smart chatbot, “Now Assist,” was released in Nov. 2023. You can think of it as a Copilot-style chatbot for all the functions supported by ServiceNow.

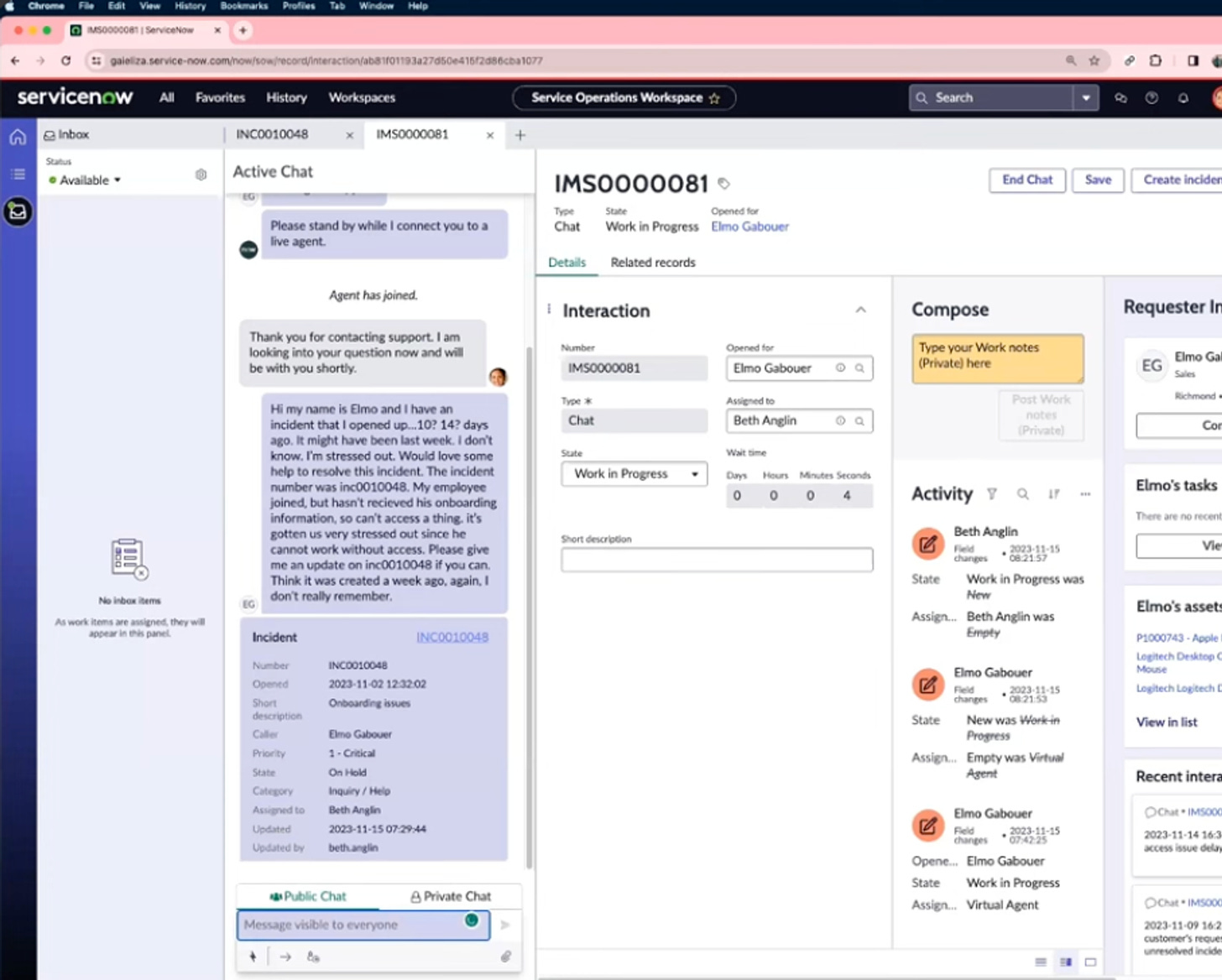

Here, you can see Now Assist automatically turning the very verbose customer message into a structured incident.

Here, you see it generating resolution notes for a technical incident.

It may not “look like much”, or look very disruptive compared with what ServiceNow could have produced had it been open to completely rethinking part of its offering around generative AI. Still, ServiceNow shipped a pan-platform Copilot and stands today as one of the few Enterprise Software vendors that truly managed to integrate LLMs into its product and create value from it.

Now Assist, as you can imagine, is available only on ServiceNow’s most expensive plans.

“GenAI products drove the largest net new annual contract value contribution in the first full quarter of any of our new product family releases ever,” - ServiceNow CFO Gina Mastantuono in a Forbes interview

It makes sense. It may not be the most “groundbreaking” way to use LLMs, but it’s a fairly approachable way for Enterprise companies to get productivity from LLMs for their customer support and internal processes while taking zero execution risk.

In that same Forbes interview, the Chief Commercial Officer shared a customer case about a software company using ServiceNow to manage its complex customer service:

“They did OpenAI to summarize prior customer calls during their pilot phase. They do not want to share proprietary company information, So, they are ingesting their customer call information into Now Assist and the data is not leaving the company. They are getting a 30% reduction in call resolution time.”

Circling back to our Part 1: the customer did a POC with OpenAI, and now the deployment is a business case with ServiceNow, not an internal pipeline.

VI. What about Salesforce and Microsoft, then?

“Naturally, there is a race between existing systems of record and workflow solutions trying to embed AI-augmented capabilities and new solutions that are AI-native.” - A16Z.

Salesforce’s Einstein Copilot, the equivalent to Now Assist, was launched in public beta in February 2024 for Sales and Service Cloud. It is available on the priciest tier of Salesforce, “Einstein 1”, for $500 per user per month. For comparison, Gong.io costs c. $100-$200 per month, while normal Salesforce costs c. $165 per user per month. This price tier also includes Data Cloud and Revenue Intelligence. Alternatively, you can add the Copilot to your existing plan for +$75.

They launched it for everyone in April.

From what I've gathered through my connections in the Salesforce ecosystem, despite all marketing efforts, the adoption hasn't been crazy, and the business model might also not be adapted for everyone.

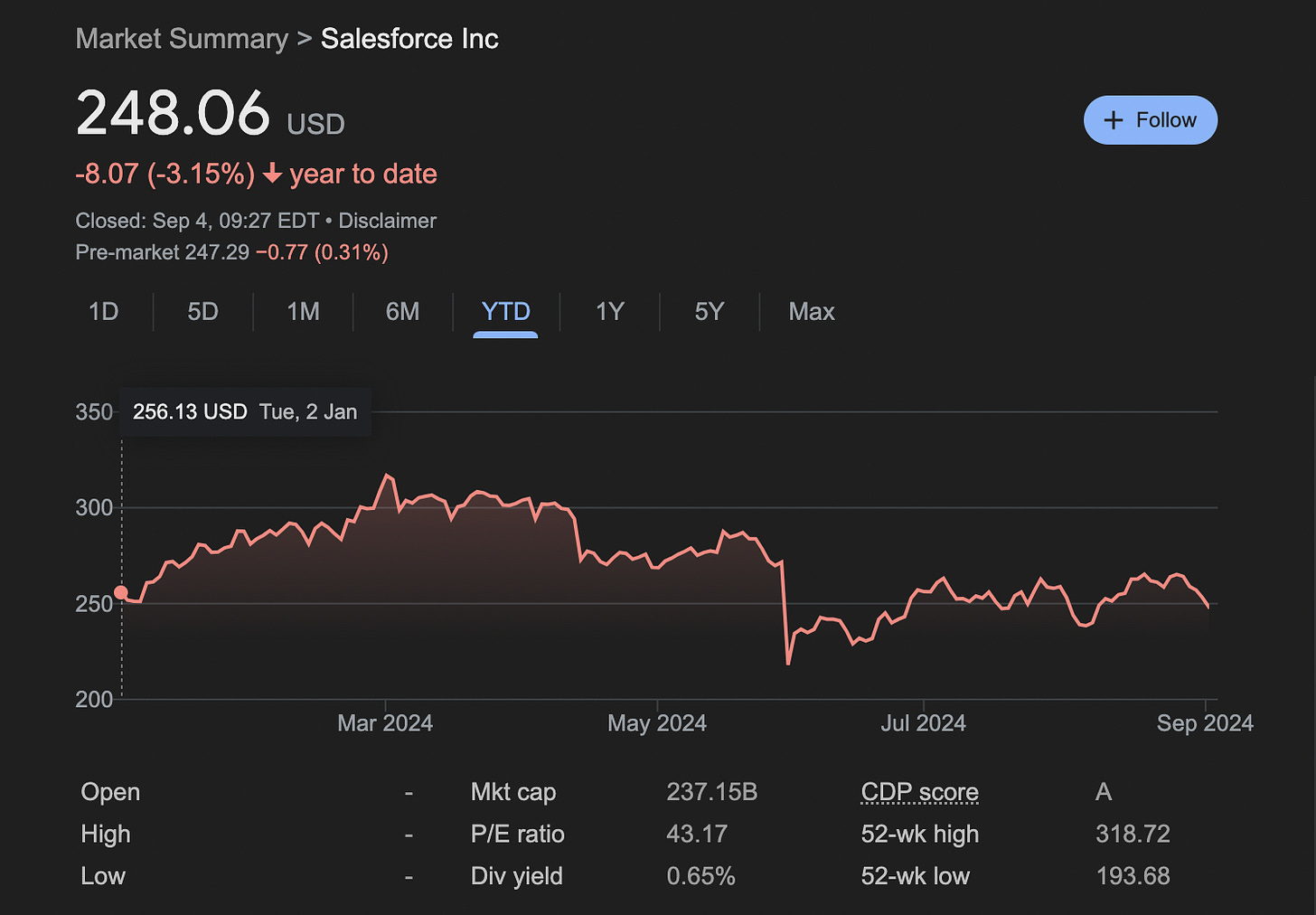

In the end, contrary to ServiceNow’s +18% YoY growth in share price and 23% YoY quarterly revenue growth, Salesforce did 8% YoY quarterly revenue growth and… 0% share price growth at a relatively similar market cap (230B$ for Salesforce and 170B$ for ServiceNow). Is it all GenAI? Probably not. However, Salesforce was punished for not delivering on its many (many) AI promises.

What about Microsoft?

Microsoft seemed so inspired by ServiceNow that it copied the entire playbook.

Like ServiceNow, it poached tier-one AI talent, the founders of Inflection AI, and generously gave back 0.5x to investors.

It created an entire OS based on the same companion chatbot idea.

Unlike ServiceNow, however, people have estimated Microsoft 365 Copilot’s added productivity at “about 1H a week,” and the market feedback is mixed.

Conclusion: we’re still in the Stone Age, and it’s ok!

If you concluded from my very long two-part rant that this market is a hype bubble and you are better off doing nothing than trying to find viable Enterprise use cases, hold on! It’s not that simple. [if you concluded that you should short Nvidia from part one and made a fortune last Tuesday, send me a little <3]

Despite the considerable VC investments in the picks and shovels and the massive investment by the giant tech platforms to create new AI-powered products, we are super early into this journey, so early that I have to combine ‘cool’ and ‘Now Assist’ in the same sentence.

TLDR: LLMs in the Enterprise are in the pilot phase for now, and the very high expectations of AI doing complex stuff must clear scientific and technical blockers before they can be delivered.

A VP Data Science at a major French bank summarized it to me: “We’re well aware that it mostly does not work. We’re keeping the team up to date by doing pilots, but we don’t plan to do anything before the science works.”

Cool science is indeed still in the making. Scientific breakthroughs are found every month! The frontlines of science change every week in the LLM world and in the broader ML/AI world.

As an illustration, I’ll leave you with an overview of one of the very cool things being brewed in the academic world. Specifically, the digest of a recent (as of May 24) article.

Remember when I said LLMs can’t deal with Excel files, and it’s a huge problem? Well… Meet 🇫🇷 CARTE - a super elegant way to pre-train neural networks with tabular data.

We owe this milestone to Gaël Varoquaux, Research Director at INRIA. Gaël is a co-founder of scikit-learn (yes, THE scikit-learn), and was notably a PhD advisor of Arthur Mensch of Mistral. Varoquaux and his team developed a method to vectorize tabular data BUT retain the “metainformation” of the table to make use of it in a vectorized form. (Please don’t beat me with a stick for oversimplifying this, I’m doing my best).

Why do we care? Today, 75% of the value derived from data science is created by “forecasting business things” based on tabular data. On tabular data, deep learning methods have been found wanting when compared to classic data science techniques such as tree-based models (e.g., XGBoost). They have simply been inferior in terms of performance.

Just a month before, an excellent Amazon team (who powers Amazon’s bottom line with their kickass time series prediction engines) published an entire meta-review that basically says “Nope, nothing to see here, neural networks x Tabular data sucks” [descriptors are my own]

Other excellent people from many different universities also tried to do univariate time series prediction, with mixed results.

However, Varoquaux's innovative approach might change all of this. His method involves representing data in a graph where each leaf represents a piece of data. The branches are labeled with the column, and all aspects of the graph are vectorized. The resulting graph node then becomes the average of the vectors.

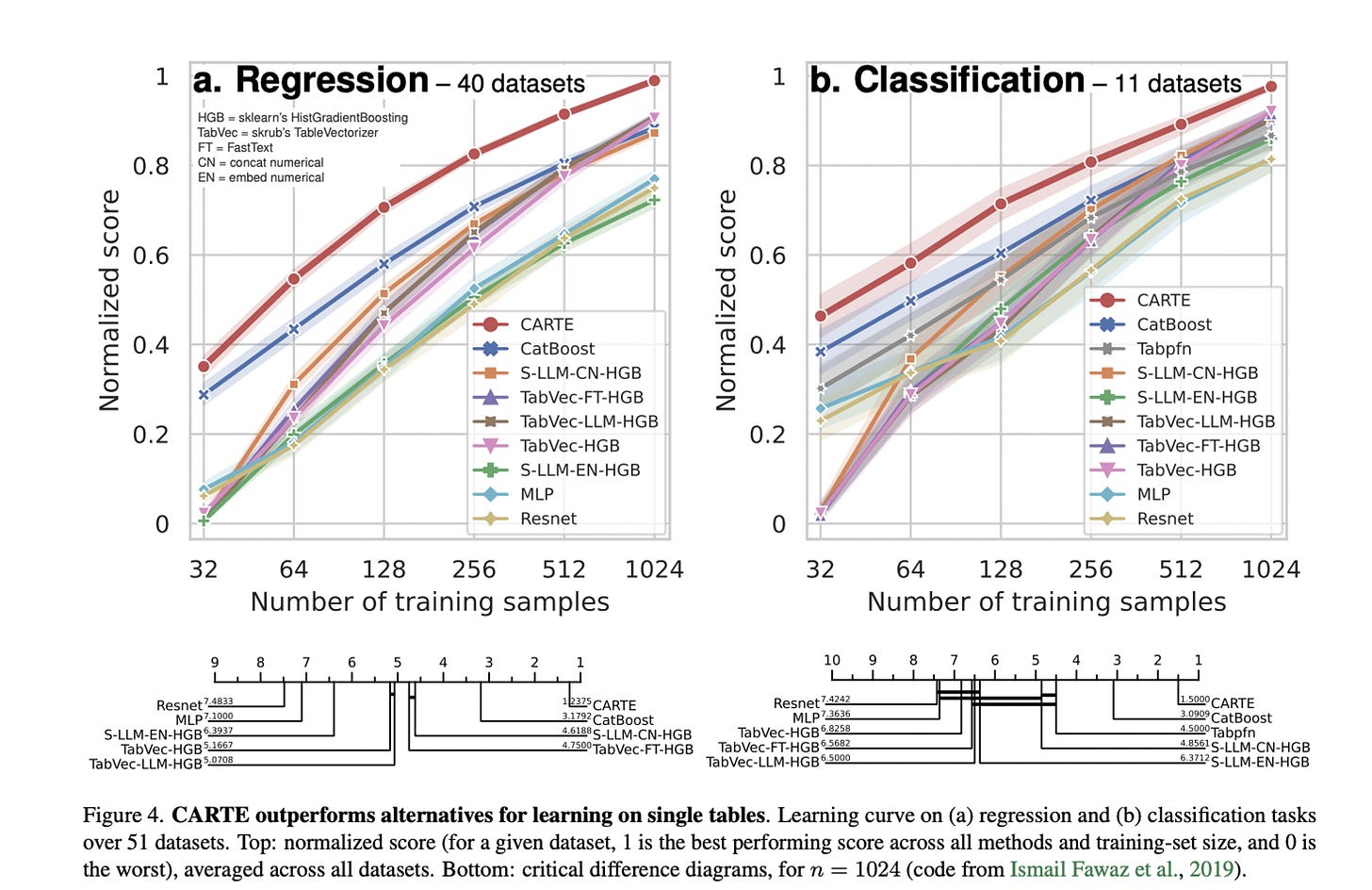
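For the code-minded, the row-to-graph idea described above can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the code from the article: `toy_embed` is a hypothetical stand-in for a real text embedding model, and the names `row_to_graphlet`, `mean_vector`, and `DIM` are made up for this example.

```python
import hashlib

DIM = 8  # toy embedding size, chosen arbitrarily for the example


def toy_embed(text, dim=DIM):
    """Hypothetical stand-in for a real text embedding model.

    Maps a string to a deterministic vector of `dim` floats in [-1, 1]
    using a hash. It carries no semantic meaning whatsoever.
    """
    digest = hashlib.sha256(text.encode("utf-8")).digest()  # 32 bytes
    return [b / 127.5 - 1.0 for b in digest[:dim]]


def mean_vector(vectors):
    """Element-wise average of a list of equal-length vectors."""
    return [sum(component) / len(vectors) for component in zip(*vectors)]


def row_to_graphlet(row):
    """Turn one table row into a small star-shaped graph.

    - each cell value becomes a leaf, stored as a vector
    - each branch is labeled with the column name, also stored as a vector
    - the central node is the average of the leaf vectors
    """
    leaves = {col: toy_embed(str(value)) for col, value in row.items()}
    edges = {col: toy_embed(col) for col in row}
    center = mean_vector(list(leaves.values()))
    return {"center": center, "leaves": leaves, "edges": edges}


row = {"company": "ServiceNow", "country": "USA", "sector": "enterprise software"}
graphlet = row_to_graphlet(row)
print(len(graphlet["center"]))  # 8
```

The point of the sketch is only the shape of the data: column names stay attached to the values as vectors instead of being thrown away, which is the “metainformation” part.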

This approach is incredibly elegant in its simplicity yet profound in its potential. When used for prediction, it performs better than traditional data science, at least in the context of one table.

Here from the article:

This methodology, scaled to a very large number of datasets (only 50 or so public datasets were used in the article to fill the vector space), could allow us to use tabular data to pre-train a larger model: a large tabular model (LTM). There are industry consortiums out there created just for this kind of opportunity, such as the EU’s Gaia-X, whose data could power a gigantic LTM.

***

I hope you enjoyed this piece! If you haven't subscribed yet and want to hear in the next edition why RAG is s***…

Marie